AHV uses Open vSwitch (OVS) to connect the CVM, the hypervisor, and user VMs to each other and to the physical network on each node. The CVM manages the OVS inside the AHV host; do not allow any external tool to modify the OVS. OVS is an open-source software switch designed to work in a multi-server virtualization environment. In AHV, the OVS behaves like a layer-2 learning switch that maintains a MAC address table. The hypervisor host and VMs connect to virtual ports on the switch. AHV exposes many popular OVS features, such as VLAN tagging, load balancing, and Link Aggregation Control Protocol (LACP).

Each AHV server maintains an OVS instance, managed as a single logical switch through Prism. A Nutanix cluster can tolerate multiple simultaneous failures because it maintains a set replication factor and offers features like block and rack awareness. However, this level of resilience requires a highly available, redundant network between the cluster’s nodes. Protecting the cluster’s read and write storage capabilities also requires highly available connectivity between nodes. Even with intelligent data placement strategies, if network connectivity between more than the allowed number of nodes breaks down, VMs on the cluster could experience write failures and enter read-only mode. To optimize I/O speed, Nutanix clusters choose to send each write to another node in the cluster. As a result, a fully populated cluster sends storage replication traffic in a full mesh, using network bandwidth between all Nutanix nodes. Because storage write latency directly correlates to the network latency between Nutanix nodes, any network latency increase adds to storage write latency.

Network view commands:

nutanix@CVM$ allssh "manage_ovs --bridge_name br0 show_uplinks"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-appctl bond/show br0-up"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl show"

nutanix@CVM$ acli

<acropolis> net.list

<acropolis> net.list_vms vlan.0

nutanix@CVM$ manage_ovs --help

nutanix@CVM$ allssh "manage_ovs show_interfaces"

nutanix@CVM$ allssh "manage_ovs --bridge_name <bridge> show_uplinks"

nutanix@CVM$ allssh "manage_ovs --bridge_name <bridge> --interfaces <interfaces> update_uplinks"

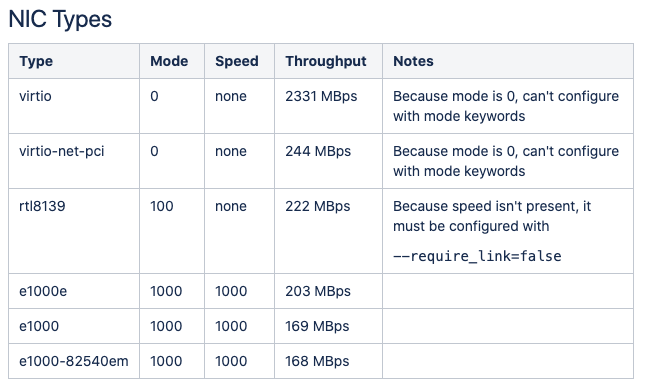

nutanix@CVM$ allssh "manage_ovs --bridge_name <bridge> --interfaces <interfaces> --require_link=false update_uplinks"

Bond configuration for 2x 10 Gb:

nutanix@CVM$ allssh "manage_ovs --bridge_name br1 create_single_bridge"

nutanix@CVM$ manage_ovs --bridge_name br0 --bond_name br0-up --interfaces 10g update_uplinks

nutanix@CVM$ manage_ovs --bridge_name br1 --bond_name br1-up --interfaces 1g update_uplinks

nutanix@cvm$ acli net.create br1_vlan99 vswitch_name=br1 vlan=99

Bond configuration for 4x 10 Gb:

nutanix@CVM$ allssh "manage_ovs --bridge_name br1 create_single_bridge"

nutanix@CVM$ allssh "manage_ovs --bridge_name br2 create_single_bridge"

nutanix@CVM$ manage_ovs --bridge_name br0 --bond_name br0-up --interfaces eth4,eth5 update_uplinks

nutanix@CVM$ manage_ovs --bridge_name br1 --bond_name br1-up --interfaces eth2,eth3 update_uplinks

nutanix@CVM$ manage_ovs --bridge_name br2 --bond_name br2-up --interfaces eth0,eth1 update_uplinks

nutanix@cvm$ acli net.create br1_vlan99 vswitch_name=br1 vlan=99

nutanix@cvm$ acli net.create br2_vlan100 vswitch_name=br2 vlan=100

+ Load balance view command

nutanix@CVM$ ssh root@192.168.5.1 "ovs-appctl bond/show"

+ Load balance active-backup configuration

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up bond_mode=active-backup"

Load balance balance-slb configuration:

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up bond_mode=balance-slb"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up other_config:bond-rebalance-interval=30000"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-appctl bond/show br0-up"

Load balance balance-tcp and LACP configuration:

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up other_config:lacp-fallback-ab=true"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up other_config:lacp-time=fast"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up lacp=active"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0-up bond_mode=balance-tcp"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-appctl bond/show br0-up"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-appctl lacp/show br0-up"

CVM and AHV host tagged VLAN configuration:

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0 tag=10"

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl list port br0"

nutanix@CVM$ change_cvm_vlan 10

nutanix@CVM$ change_cvm_vlan 0

nutanix@CVM$ ssh root@192.168.5.1 "ovs-vsctl set port br0 tag=0"

High availability options:

nutanix@CVM$ acli ha.update num_reserved_hosts=X

nutanix@CVM$ acli ha.update reservation_type=kAcropolisHAReserveSegments

nutanix@CVM$ acli vm.update <VM Name> ha_priority=-1

nutanix@CVM$ acli vm.create <VM Name> ha_priority=-1

+ Live migration bandwidth limits

nutanix@CVM$ acli vm.migrate slow-migrate-VM1 bandwidth_mbps=100 host=10.1x.1x.11 live=yes

+ Viewing NUMA topology

nutanix@cvm$ allssh "virsh capabilities | egrep 'cell id|cpus num|memory unit'"

+ Verifying transparent huge pages

nutanix@CVM$ ssh root@192.168.5.1 "cat /sys/kernel/mm/transparent_hugepage/enabled"

[always] madvise never
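In that output, the bracketed word is the setting currently in effect. As a minimal sketch, assuming the line has already been captured as a string, the active mode can be extracted like this (the function name `active_thp_mode` is illustrative and not part of any Nutanix tooling):

```python
import re

def active_thp_mode(sysfs_line: str) -> str:
    """Return the bracketed (active) mode from the transparent_hugepage 'enabled' file."""
    match = re.search(r"\[(\w+)\]", sysfs_line)
    if match is None:
        raise ValueError(f"no active mode found in: {sysfs_line!r}")
    return match.group(1)

# Sample line copied from the output above.
print(active_thp_mode("[always] madvise never"))
```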

The following command retrieves dropped-packet statistics for a vNIC:

nutanix@cvm$ arithmos_cli master_get_time_range_stats entity_type=<virtual_nic> entity_id=<virtual_nic_id> field_name=<stat_name> start_time_usecs=<starttime> end_time_usecs=<endtime>

nutanix@cvm$ ncli vm list | grep -B2 WinServer

Id : 000545db-3fc1-90a4-4add-002590c84bb8::2f5ab0e8-a843-4c29-b5b5-c142dea84db5

Uuid : 2f5ab0e8-a843-4c29-b5b5-c142dea84db5

Name : Jin-WinServer

ncli> network list-vm-nics vm-id=2f5ab0e8-a843-4c29-b5b5-c142dea84db5

VM Nic ID : 000545db-3fc1-90a4-4add-002590c84bb8::2f5ab0e8-a843-4c29-b5b5-c142dea84db5:50:6b:8d:ed:c7:c8

Name : tap6

Adapter Type : ethernet

VM ID : 000545db-3fc1-90a4-4add-002590c84bb8::2f5ab0e8-a843-4c29-b5b5-c142dea84db5

MAC Address : 50:6b:8d:ed:c7:c8

IPv4 Addresses : 10.xx.xx.xx

MTU In Bytes : 1500

Associated Host Nic Ids : 000545db-3fc1-90a4-4add-002590c84bb8::5:eth3

Stats :

received_bytes : 52160

transmitted_pkts : 8

dropped_transmitted_pkts : 0

transmitted_bytes : 448

received_rate_kBps : 1

error_transmitted_pkts : 0

dropped_received_pkts : 0

error_received_pkts : 0

transmitted_rate_kBps : 0

received_pkts : 739

nutanix@cvm$ date -d "20170310 08:00:00 UTC" +%s

1489132800

nutanix@cvm$ date -d "20170313 08:00:00 UTC" +%s

1489392000

1489392000

nutanix@cvm$ arithmos_cli master_get_time_range_stats entity_type=virtual_nic entity_id=2f5ab0e8-a843-4c29-b5b5-c142dea84db5:50:6b:8d:ed:c7:c8 field_name=network.dropped_received_pkts start_time_usecs=1489132800000000 end_time_usecs=1489392000000000
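The example above involves two small conversions: the epoch seconds printed by `date` are multiplied by 1,000,000 to get microseconds, and the `entity_id` appears to be the VM Nic ID without its cluster-ID prefix (everything up to `::`). The following Python sketch reproduces both steps and assembles the command string. The helper names are hypothetical, and the prefix-stripping rule is inferred only from the sample values shown here.

```python
from datetime import datetime, timezone

def to_epoch_usecs(timestamp_utc: str) -> int:
    """Convert 'YYYYMMDD HH:MM:SS' (interpreted as UTC) to microseconds since the epoch."""
    parsed = datetime.strptime(timestamp_utc, "%Y%m%d %H:%M:%S")
    parsed = parsed.replace(tzinfo=timezone.utc)
    return int(parsed.timestamp()) * 1_000_000

def nic_entity_id(vm_nic_id: str) -> str:
    """Drop the cluster-ID prefix (up to '::') from an ncli VM Nic ID (assumed rule)."""
    return vm_nic_id.split("::", 1)[1]

def build_stats_command(vm_nic_id: str, field_name: str, start_utc: str, end_utc: str) -> str:
    """Assemble the arithmos_cli invocation in the same form as the example above."""
    return (
        "arithmos_cli master_get_time_range_stats entity_type=virtual_nic "
        f"entity_id={nic_entity_id(vm_nic_id)} field_name={field_name} "
        f"start_time_usecs={to_epoch_usecs(start_utc)} "
        f"end_time_usecs={to_epoch_usecs(end_utc)}"
    )

# Values copied from the ncli output above.
VM_NIC_ID = (
    "000545db-3fc1-90a4-4add-002590c84bb8::"
    "2f5ab0e8-a843-4c29-b5b5-c142dea84db5:50:6b:8d:ed:c7:c8"
)
print(build_stats_command(VM_NIC_ID, "network.dropped_received_pkts",
                          "20170310 08:00:00", "20170313 08:00:00"))
```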

How to find which tap interface a UVM is using and fetch its configuration:

+ Run "virsh list --title" on the AHV host to map the name of the UVM to its UUID (the example below uses "virsh list --all", which lists the domains by UUID)

[root@Onyxia-2 ~]# virsh list --all

Id Name State

----------------------------------------------------

1 NTNX-Onyxia-2-CVM running

135 3c7d7287-e410-4424-b544-d11e9f8d184d running

Let's use domain ID 135 as the example.

+ The following command shows which tap interface has been created for this UVM

[root@Onyxia-2 ~]# virsh domiflist 135

Interface Type Source Model MAC

-------------------------------------------------------

tap3 net - virtio 50:6b:8d:69:38:67

Here, tap3 has been created on the OVS switch on this AHV host. Tap interfaces are ephemeral: they exist only while the VM is running. If a VM is restarted, it gets a new tap interface ID, so we may need to recheck the tap ID for the corresponding UVM.

+ Now we can check the tap3 configuration on the OVS switch by running the following command

[root@Onyxia-2 ~]# ovs-vsctl show

-- limited data shown --

Bridge "br0"

Port "br0-up"

Interface "eth2"

Interface "eth3"

Bridge "br0.local"

Port "tap3"

tag: 102

Interface "tap3"

ovs_version: "2.5.2"

From the above output we can verify that the "tap3" interface is created on bridge br0.local and carries VLAN tag 102, so all packets from this UVM leave the host toward the physical switch tagged with VLAN 102.

To allow this traffic on the upstream switch, the switch port must be configured as a trunk with that VLAN allowed.
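When a host carries many tap ports, reading the tags by eye gets tedious. The sketch below is a rough illustration, not a Nutanix or OVS utility. It walks captured "ovs-vsctl show" text line by line and records the VLAN tag of each port (None for untagged ports). It assumes the output uses the `Port`, `tag:`, and `Bridge` keywords as in the excerpt above; the function name is hypothetical.

```python
import re

def port_vlan_tags(ovs_show_output: str) -> dict:
    """Map each port name in 'ovs-vsctl show' output to its VLAN tag (None if untagged)."""
    tags = {}
    current_port = None
    for raw in ovs_show_output.splitlines():
        line = raw.strip()
        port = re.match(r'Port "?([^"]+)"?$', line)
        if port:
            current_port = port.group(1)
            tags[current_port] = None
        elif line.startswith("Bridge "):
            current_port = None  # a new bridge section starts; no port is open yet
        elif line.startswith("tag:") and current_port is not None:
            tags[current_port] = int(line.split(":", 1)[1])
    return tags

# Excerpt copied from the output above.
OVS_SHOW_SAMPLE = '''
Bridge "br0"
    Port "br0-up"
        Interface "eth2"
        Interface "eth3"
Bridge "br0.local"
    Port "tap3"
        tag: 102
        Interface "tap3"
ovs_version: "2.5.2"
'''
print(port_vlan_tags(OVS_SHOW_SAMPLE))
```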

If we are experiencing a networking issue for a specific UVM, we can start troubleshooting as follows:

+ Check the configuration of the tap ports on the OVS switch, in particular the VLAN tags: is a VLAN tag missing, and if one is present, is it the VLAN that the UVM is expected to be on?

+ Then check whether OVS is learning the UVM's MAC address on the expected VLAN. To get the MAC address of the UVM, follow the steps mentioned above: start with "acli vm.list" to get the UUID of the VM, go to the specific host and run "virsh list --all", note the Id, and run "virsh domiflist <id>", which shows the tap information along with the MAC address. Alternatively, log in to the UVM and run "ifconfig" on Linux UVMs or "ipconfig /all" on Windows UVMs.

[root@Onyxia-2 ~]# ovs-appctl fdb/show br0

port VLAN MAC Age

10 0 0c:c4:7a:c8:e7:6c 296

10 0 00:25:90:eb:f5:84 273

9 102 50:6b:8d:69:38:67 267

10 3018 00:50:56:87:c8:a2 267

The above command shows the forwarding table of the OVS switch. The UVM in our case has the MAC address 50:6b:8d:69:38:67, which the OVS switch has learned on port 9 with VLAN tag 102.
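On a busy host the forwarding table can be long, so it helps to filter it for a single MAC address. The following sketch assumes the four-column layout shown above (port, VLAN, MAC, Age) and works on captured text. `find_mac` is a hypothetical helper, not an OVS command.

```python
def find_mac(fdb_output: str, mac: str):
    """Return (port, vlan, age) for a MAC in 'ovs-appctl fdb/show' output, or None."""
    wanted = mac.lower()
    for line in fdb_output.splitlines():
        fields = line.split()
        # Data rows have exactly four columns: port, VLAN, MAC, Age.
        if len(fields) == 4 and fields[2].lower() == wanted:
            return fields[0], int(fields[1]), int(fields[3])
    return None

# Table copied from the output above.
FDB_SAMPLE = """
port VLAN MAC Age
10 0 0c:c4:7a:c8:e7:6c 296
10 0 00:25:90:eb:f5:84 273
9 102 50:6b:8d:69:38:67 267
10 3018 00:50:56:87:c8:a2 267
"""
print(find_mac(FDB_SAMPLE, "50:6b:8d:69:38:67"))
```

A result of None means OVS has not learned the address, which points back at the VM or its tap port rather than the upstream network.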

+ Once we verify this, we know that the traffic is being sent out from the OVS switch, and troubleshooting moves to the external network, that is, the upstream switch configuration. Check whether LACP is enabled; otherwise, start with the MAC address table on the upstream switches to find out whether the MAC addresses are being learned there.

LLDPCTL

[root@Onyxia-2 ~]# lldpctl

-------------------------------------------------------------------------------

LLDP neighbors:

-------------------------------------------------------------------------------

Interface: eth2, via: LLDP, RID: 1, Time: 97 days, 08:10:55

Chassis:

ChassisID: mac 2c:60:0c:95:9c:2c

SysName: cumulus

SysDescr: Cumulus Linux version 2.5.6 running on Intel Mohon Peak

TTL: 120

MgmtIP: 10.63.0.12

Capability: Bridge, on

Capability: Router, on

Port:

PortID: ifname swp35

PortDescr: swp35

-------------------------------------------------------------------------------

Interface: eth3, via: LLDP, RID: 2, Time: 97 days, 08:10:55

Chassis:

ChassisID: mac 2c:60:0c:95:ab:e0

SysName: cumulus

SysDescr: Cumulus Linux version 2.5.6 running on Intel Mohon Peak

TTL: 120

MgmtIP: 10.63.0.13

Capability: Bridge, on

Capability: Router, on

Port:

PortID: ifname swp31

PortDescr: swp31

-------------------------------------------------------------------------------

lldpctl uses LLDP (Link Layer Discovery Protocol) to discover the upstream switches that are connected to the host. It is used to map each physical NIC of the node to the switch port it is connected to on the upstream switch. From the above example, we can confirm that the "eth2" interface is connected to the "swp35" interface and the "eth3" interface is connected to the "swp31" interface of a Cumulus switch. Both neighbors report the SysName cumulus, but the different chassis IDs and management IPs indicate two separate switches; in other deployments, both ports can be connected to the same switch. (Port-channels would be configured between the switches.)

It also helps to get additional information about the VLANs configured on those switch ports. These ports can be configured as access or trunk, so we need to confirm with the customer whether tagged or untagged traffic should be sent from the hosts/CVMs.
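The NIC-to-switch-port mapping described above can also be pulled out of captured lldpctl text. This is a minimal sketch that assumes the "Key: value" layout shown in the sample output. The function name and the choice of fields to keep are illustrative.

```python
def lldp_neighbors(lldpctl_output: str) -> dict:
    """Map each local interface to selected neighbor fields from lldpctl text output."""
    wanted_keys = ("ChassisID", "SysName", "MgmtIP", "PortID")
    neighbors = {}
    current = None
    for raw in lldpctl_output.splitlines():
        line = raw.strip()
        if line.startswith("Interface:"):
            # "Interface: eth2, via: LLDP, ..." -> "eth2"
            name = line.split(":", 1)[1].split(",", 1)[0].strip()
            current = neighbors.setdefault(name, {})
        elif current is not None and ":" in line:
            key, value = (part.strip() for part in line.split(":", 1))
            if key in wanted_keys and value:
                current[key] = value
    return neighbors

# Abbreviated from the output above.
LLDP_SAMPLE = """
Interface: eth2, via: LLDP, RID: 1, Time: 97 days, 08:10:55
Chassis:
ChassisID: mac 2c:60:0c:95:9c:2c
SysName: cumulus
MgmtIP: 10.63.0.12
Port:
PortID: ifname swp35
Interface: eth3, via: LLDP, RID: 2, Time: 97 days, 08:10:55
Chassis:
ChassisID: mac 2c:60:0c:95:ab:e0
SysName: cumulus
MgmtIP: 10.63.0.13
Port:
PortID: ifname swp31
"""
for nic, info in lldp_neighbors(LLDP_SAMPLE).items():
    print(nic, "->", info["SysName"], info["PortID"], info["MgmtIP"])
```

Comparing the ChassisID values of two uplinks is one way to tell whether they land on the same physical switch.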

Thank-you note: Logo from Nutanix and commands from notes prepared by great SREs
