This year VMware announced a new product line, “VMware Tanzu”, a platform that will manage all your Kubernetes clusters (across vSphere, VMware PKS, public clouds, managed services, packaged distributions, or even DIY) from a single point of control. This sets the direction towards next-gen applications written as microservices, where infrastructure is no longer a blocker for developers. Many companies tried to automate VM provisioning for developers with vRA or other solutions, but now it’s time to deliver ready-to-provision Kubernetes infrastructure rather than VMs. I remember the old days of working with a separate storage team, a role that has largely vanished with HCI (Hyper-Converged Infrastructure). It’s always good to know the basic concepts of new technologies, and Docker is the core technology on which Kubernetes orchestration became popular. Today let’s spend some time understanding Docker networking concepts.

Below is the analogy I created for a Docker networking session I delivered to my team. We can’t do any magic without the physical layer, but there is overlay magic (VXLAN or GENEVE) that establishes connectivity between the Docker nodes (a node can be a VM, a physical server, or a cloud instance). In this example, we are going to cover the networking concepts required to allow the containers (yellow) to communicate with each other and with existing VLANs and the outside world. VMware developed the compute stack first and later added storage (VSAN) and network (NSX-v & NSX-T). Similarly, Docker first focused on the compute stack and later developed a nice plugin-based network stack for easy deployments.

Docker follows the CNM (Container Network Model), while Kubernetes uses the CNI (Container Network Interface) with more network enhancements. Libnetwork is the code behind the networking magic, and drivers such as overlay, MACVLAN, IPVLAN, and bridge ship natively with the Docker installation. At a high level, we can classify Docker networking into three sections.

Single Host Networking

Multi-Host Networking (Docker Swarm)

User-defined Networking

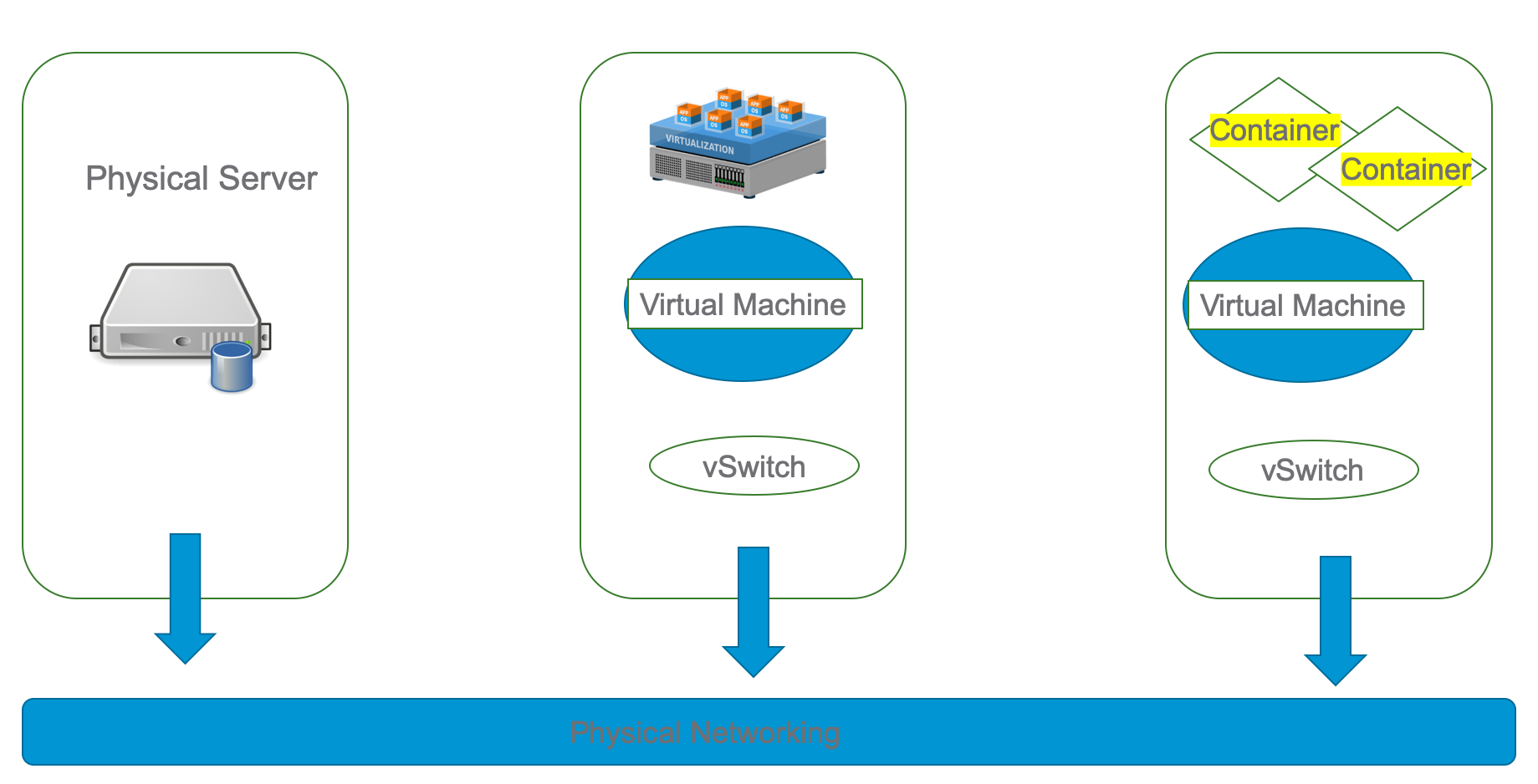

Single Host Networking:

When you install Docker, it automatically creates three networks (bridge, host, and none). We can think of the bridge network as single-host networking: containers attached to it can communicate with each other by IP address without any additional configuration. The default bridge network is present on all Docker hosts. If you do not specify a different network, new containers are automatically connected to the default bridge network. Run the following two commands to start two busybox containers, each connected to the default bridge network.

    $ docker run -itd --name=container1 busybox
    3386a527aa08b37ea9232cbcace2d2458d49f44bb05a6b775fba7ddd40d8f92c
    $ docker run -itd --name=container2 busybox
    94447ca479852d29aeddca75c28f7104df3c3196d7b6d83061879e339946805c

Containers connected to the default bridge network can communicate with each other by IP address. Docker does not support automatic service discovery on the default bridge network. If you want containers to resolve each other’s IP addresses by container name, you should use user-defined networks instead. From inside one container, use the ping command to test the connection to the IP address of the other container, as shown in the example below.

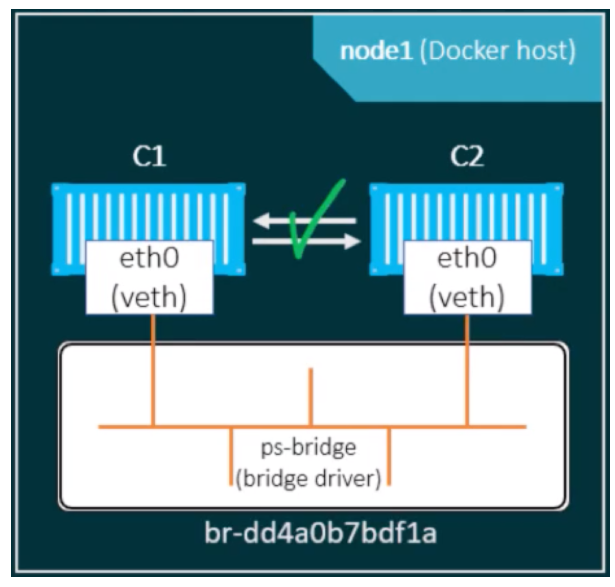
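If you want to reproduce the ping test yourself, here is a minimal sketch. It assumes the two busybox containers from above are still running; the helper names `container_ip` and `ping_by_ip` are my own and are not Docker commands.

```shell
# Print the IP address(es) Docker assigned to a container.
# The Go template walks every network the container is attached to.
container_ip() {
  docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Ping container $2 from inside container $1, addressing it by IP.
ping_by_ip() {
  docker exec "$1" ping -c 3 "$(container_ip "$2")"
}

# Usage (needs a running Docker daemon):
#   docker network ls                 # shows the automatically created networks
#   ping_by_ip container1 container2
```

Pinging by name (`docker exec container1 ping container2`) is expected to fail here, because the default bridge network has no automatic service discovery.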

Multi-Host Networking:

The overlay driver creates networks that span multiple nodes, and swarm mode is the usual way to use it. This section covers the networks that swarm services rely on; the user-defined networking section covers the other overlay models.

A Docker swarm generates two different kinds of traffic:

- Control and management plane traffic: This includes swarm management messages, such as requests to join or leave the swarm. This traffic is always encrypted.

- Application data plane traffic: This includes container traffic and traffic to and from external clients.

The following three network concepts are important to swarm services:

- Overlay networks manage communications among the Docker daemons participating in the swarm. You can create overlay networks in the same way as user-defined networks for standalone containers. You can also attach a service to one or more existing overlay networks to enable service-to-service communication. Overlay networks are Docker networks that use the overlay network driver.

- The ingress network is a special overlay network that facilitates load balancing among a service’s nodes. When any swarm node receives a request on a published port, it hands that request off to a module called IPVS. IPVS keeps track of all the IP addresses participating in that service, selects one of them, and routes the request to it over the ingress network.

- The ingress network is created automatically when you initialize or join a swarm. Most users do not need to customize its configuration, but Docker 17.05 and higher allow you to do so.

- docker_gwbridge is a bridge network that connects the overlay networks (including the ingress network) to an individual Docker daemon’s physical network. By default, each container a service is running is connected to its local Docker daemon host’s docker_gwbridge network. The docker_gwbridge network is created automatically when you initialize or join a swarm. Most users do not need to customize its configuration, but Docker allows you to do so.
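To see the two automatically created networks for yourself, a small sketch like the one below should do. The function name `swarm_default_networks` is only an illustrative helper of mine, and it assumes the host has already run `docker swarm init` or joined a swarm.

```shell
# List only the networks that swarm mode creates on its own.
# Repeating --filter with the same key matches either name.
swarm_default_networks() {
  docker network ls --filter name=ingress --filter name=docker_gwbridge
}

# Usage (needs a running Docker daemon):
#   docker swarm init          # turns this host into a single-node swarm
#   swarm_default_networks     # ingress (overlay) and docker_gwbridge (bridge)
```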

User-defined Networking:

It is recommended to use user-defined bridge networks to control which containers can communicate with each other, and also enable automatic DNS resolution of container names to IP addresses. Docker provides default network drivers for creating these networks. You can create a new bridge network, overlay network or MACVLAN network. You can also create a network plugin or remote network for complete customization and control. You can create as many networks as you need, and you can connect a container to zero or more of these networks at any given time. In addition, you can connect and disconnect running containers from networks without restarting the container. When a container is connected to multiple networks, its external connectivity is provided via the first non-internal network, in lexical order.
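Here is a minimal sketch of the points above. The network name `my-bridge` and the container names `app1` and `app2` are placeholders I picked for illustration, and `setup_named_bridge` is my own helper, not a Docker command.

```shell
# Create a user-defined bridge network and start two busybox
# containers attached to it.
setup_named_bridge() {
  docker network create --driver bridge "$1"
  docker run -itd --name=app1 --network="$1" busybox
  docker run -itd --name=app2 --network="$1" busybox
}

# Usage (needs a running Docker daemon):
#   setup_named_bridge my-bridge
#   docker exec app1 ping -c 3 app2                  # resolved by container name
#   docker network connect my-bridge container1      # attach a running container
#   docker network disconnect my-bridge container1   # detach it, no restart needed
```

Unlike on the default bridge, the ping by name should work here because user-defined bridge networks provide automatic DNS resolution of container names.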

Bridge networks:

A bridge network is the most common type of network used in Docker. Bridge networks are similar to the default bridge network, but add some new features and remove some old abilities. A bridge network is useful in cases where you want to run a relatively small network on a single host. You can, however, create significantly larger networks by creating an overlay network.

The docker_gwbridge network:

The docker_gwbridge is a local bridge network which is automatically created by Docker in two different circumstances:

- When you initialize or join a swarm, Docker creates the docker_gwbridge network and uses it for communication among swarm nodes on different hosts.

- When none of a container’s networks can provide external connectivity, Docker connects the container to the docker_gwbridge network.

Overlay networks in swarm mode:

You can create an overlay network on a manager node running in swarm mode without an external key-value store. The swarm makes the overlay network available only to nodes in the swarm that require it for a service. When you create a service that uses the overlay network, the manager node automatically extends the overlay network to nodes that run service tasks.
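As a rough sketch of that flow, run on a manager node: the names `my-overlay` and `web`, the nginx image, the replica count, and the published port are all assumptions chosen for illustration, and `create_overlay_service` is my own helper.

```shell
# Create an overlay network ($1) and a replicated service ($2) attached to it.
create_overlay_service() {
  docker network create --driver overlay "$1"
  docker service create --name "$2" --network "$1" \
    --replicas 2 --publish published=8080,target=80 nginx
}

# Usage (on a swarm manager with a running Docker daemon):
#   create_overlay_service my-overlay web
#   docker service ps web      # shows which nodes run the service tasks
```

Worker nodes only see the overlay network once a task of the service is scheduled on them, which matches the behaviour described above.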

An overlay network without swarm mode:

If you are not using Docker Engine in swarm mode, the overlay network requires a valid key-value store service. Supported key-value stores include Consul, Etcd, and ZooKeeper (distributed store). Before creating a network in this way, you must install and configure your chosen key-value store service. The Docker hosts that you intend to network must be able to communicate with each other and with the key-value store service. Docker Engine running in swarm mode is not compatible with networking with an external key-value store. Newer Docker releases have deprecated this external key-value store mode, so prefer swarm mode where you can.

Conclusion:

There are multiple ways to create Docker networks, and we need to choose the appropriate model based on the use case and customer requirements. Swarm mode goes hand in hand with highly available applications, and user-defined networks are handy for meeting customer networking requirements. Please feel free to refer to the official Docker documentation on Docker networking.
