In our last post, we explored the ESXi CPU scheduler and why NUMA matters so much for performance. Today, we’re tackling the other half of the equation: memory.

Poor memory management can cripple even the most powerful servers—leading to swapping, slowdowns, and frustrated users. Luckily, ESXi includes some of the most advanced memory management technologies in the industry.

In this guide, we’ll cover:

- How to right-size VM memory
- What memory overcommitment really means
- Why large memory pages matter
- How to leverage Memory Tiering in vSphere 9.0

Let’s dig in. 🚀

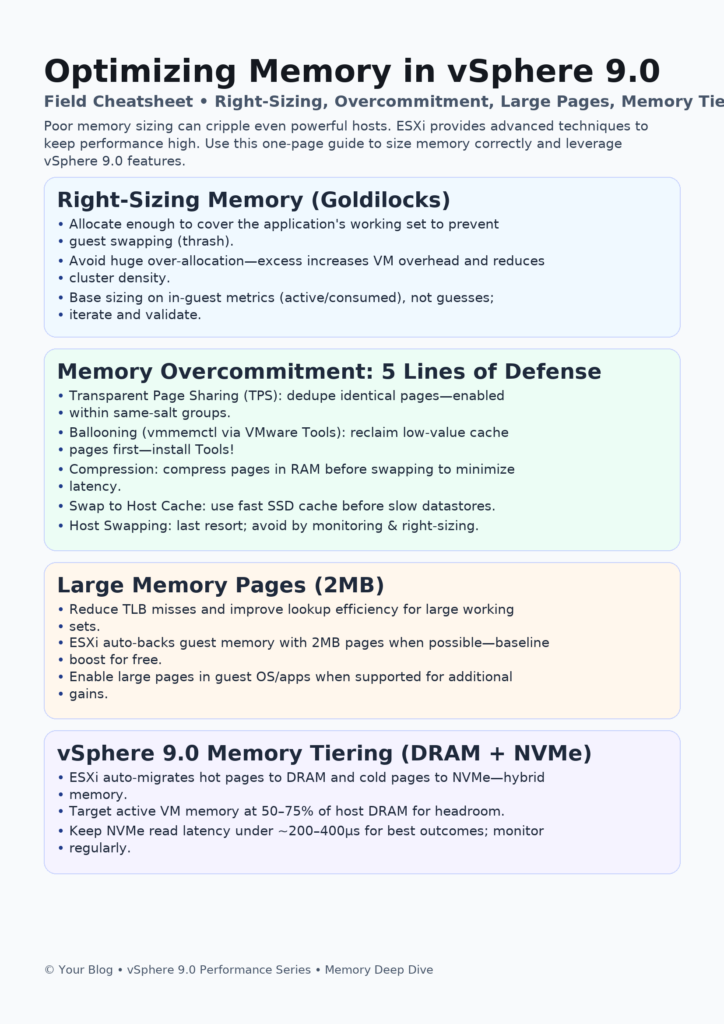

🎨 “vSphere 9.0 Memory Performance Cheatsheet”

🧠 Memory Sizing: The Goldilocks Principle

Getting memory sizing right is all about balance.

- Under-Allocating = Disaster: If a VM doesn’t have enough memory, the guest OS will start swapping to its own virtual disk. This kills performance.
- Over-Allocating = Waste: Oversizing a VM increases the per-VM overhead memory that ESXi sets aside on the host. That overhead can’t be reclaimed, so fewer VMs fit on the host.

👉 Rule of Thumb: Monitor actual guest memory usage and size accordingly. Don’t guess.
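
To make "size from what you measure" concrete, here is a minimal Python sketch. It is not a VMware tool or API: the function name `recommend_vm_memory_gib`, the 95th-percentile choice, and the 25% headroom are all assumptions for illustration, and the samples stand in for whatever guest memory usage numbers your monitoring already collects.

```python
import math


def recommend_vm_memory_gib(usage_samples_gib, headroom=0.25, percentile=95):
    """Suggest a VM memory size (whole GiB) from observed guest usage samples.

    The percentile and headroom defaults are illustrative assumptions,
    not VMware guidance.
    """
    if not usage_samples_gib:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples_gib)
    # Nearest-rank percentile: the smallest sample that covers
    # `percentile` percent of the observations.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    observed_peak = ordered[rank - 1]
    # Add headroom on top of the observed peak and round up to a whole GiB.
    return math.ceil(observed_peak * (1 + headroom))


if __name__ == "__main__":
    samples = [6.0, 6.5, 7.0, 7.2, 9.8]  # made-up guest usage in GiB
    print(recommend_vm_memory_gib(samples))
```

Using a high percentile rather than the average keeps short bursts from being sized away, while the headroom leaves room for growth. Tune both to your own workload.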

⚡ The Magic of Memory Overcommitment

One of vSphere’s superpowers is its ability to allocate more total VM memory than the host physically has. ESXi uses a five-layer approach:

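- Transparent Page Sharing (TPS): Deduplicates identical memory pages. In recent releases, sharing across VMs is disabled by default, so pages are deduplicated within a VM unless you change the salting settings.
- Ballooning (via VMware Tools): The balloon driver inflates inside the guest, so the guest OS itself gives up the memory it values least, typically free pages and file cache.
- Memory Compression: Compresses pages instead of swapping them out.
- Swap to Host Cache: Uses SSD cache before resorting to slow datastores.
- Host-Level Swapping (last resort): Avoid this whenever possible.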

💡 Tip: Install VMware Tools everywhere. Ballooning only works if Tools are present.
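
A quick way to see how far a host is overcommitted is to divide the total configured VM memory by the host's physical memory. The Python sketch below is plain arithmetic with hypothetical names and made-up numbers. It does not query ESXi.

```python
def overcommit_ratio(vm_configured_gib, host_physical_gib):
    """Return configured VM memory divided by physical host memory.

    A result above 1.0 means the host is overcommitted and relies on
    the reclamation techniques listed above when VMs get busy.
    """
    if host_physical_gib <= 0:
        raise ValueError("host_physical_gib must be positive")
    return sum(vm_configured_gib) / host_physical_gib


if __name__ == "__main__":
    vms = [64, 64, 128, 128]  # configured memory per VM in GiB (made up)
    print(overcommit_ratio(vms, host_physical_gib=256))
```

The ratio only tells you how much is promised, not how much is actively used, so read it alongside real usage data.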

📏 Leveraging Large Memory Pages

Modern CPUs support 2MB “large pages” alongside standard 4KB pages.

- Why it matters: Fewer TLB misses, more efficient memory lookups.
- Automatic in ESXi: vSphere backs guest memory with 2MB pages when possible.
- Best Practice: Configure guest OS + apps to use large pages where supported for extra gains.
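
The TLB benefit is easy to see with some arithmetic. The Python sketch below counts how many pages it takes to map a VM's memory at each page size, and how much memory a TLB of a given size can cover. The 64 GiB VM and the 1,536-entry TLB are illustrative assumptions. Real CPUs often have different entry counts per page size.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def pages_needed(memory_bytes, page_bytes):
    """Number of pages required to map `memory_bytes` (rounded up)."""
    return -(-memory_bytes // page_bytes)


def tlb_reach_bytes(tlb_entries, page_bytes):
    """Memory covered when every TLB entry maps one page of this size."""
    return tlb_entries * page_bytes


if __name__ == "__main__":
    vm_memory = 64 * GIB   # hypothetical VM size
    tlb_entries = 1536     # hypothetical TLB size
    for label, page in (("4KB", 4 * KIB), ("2MB", 2 * MIB)):
        pages = pages_needed(vm_memory, page)
        reach_mib = tlb_reach_bytes(tlb_entries, page) // MIB
        print(f"{label} pages: {pages} pages to map, TLB reach {reach_mib} MiB")
```

With 2MB pages there are 512 times fewer pages to track, and the same number of TLB entries covers 512 times more memory.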

🚀 New in vSphere 9.0: Memory Tiering

The headline feature: Memory Tiering.

- How it works: Combines fast DRAM with a large NVMe tier.
  - Hot data → DRAM.
  - Cold data → NVMe.
  - ESXi does the movement automatically.

Best Practices:

- Keep active VM memory ≤ 50–75% of DRAM.
- Ensure NVMe device read latency stays below 200–400µs.
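
These two guidelines can be turned into a simple sanity check. The Python sketch below is hypothetical and does not use any vSphere API: you feed it numbers from your own monitoring. Its defaults assume the conservative end of each range above (50% of DRAM, 200µs), and both are parameters so you can loosen them.

```python
def check_memory_tiering(active_gib, dram_gib, nvme_read_latency_us,
                         max_active_fraction=0.5, max_read_latency_us=200.0):
    """Return a list of warnings; an empty list means both checks pass.

    Defaults are the conservative ends of the ranges quoted above and
    are assumptions, not values read from ESXi.
    """
    if dram_gib <= 0:
        raise ValueError("dram_gib must be positive")
    findings = []
    active_fraction = active_gib / dram_gib
    # Guideline 1: active VM memory should fit comfortably in DRAM.
    if active_fraction > max_active_fraction:
        findings.append(
            f"active memory is {active_fraction:.0%} of DRAM "
            f"(limit {max_active_fraction:.0%})"
        )
    # Guideline 2: NVMe read latency should stay below the threshold.
    if nvme_read_latency_us >= max_read_latency_us:
        findings.append(
            f"NVMe read latency is {nvme_read_latency_us:.0f}us "
            f"(should stay below {max_read_latency_us:.0f}us)"
        )
    return findings


if __name__ == "__main__":
    for warning in check_memory_tiering(200, 256, 450):
        print("WARNING:", warning)
```

Running a check like this against trend data before enabling tiering gives an early hint of whether cold pages will really stay cold.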

🔮 Up Next in the Series…

We’ve tackled CPU and Memory—but performance is nothing without I/O.

In the next article, we’ll explore:

- Virtual disk types
- Multipathing strategies
- VAAI & NVMe performance boosts

Have you battled a memory performance issue in vSphere? Drop your war stories & questions in the comments 👇